KeplerMapper & NLP examples

Last run 2021-10-08 with kmapper version 2.0.1

Newsgroups20

[1]:

# from kmapper import jupyter

import kmapper as km

from kmapper import Cover, jupyter

import numpy as np

from sklearn.datasets import fetch_20newsgroups

from sklearn.cluster import AgglomerativeClustering

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import TruncatedSVD

from sklearn.manifold import Isomap

from sklearn.preprocessing import MinMaxScaler

Data

We will use the Newsgroups20 dataset, a canonical NLP dataset. Its training subset contains 11314 labeled postings from 20 different newsgroups.

[2]:

newsgroups = fetch_20newsgroups(subset='train')

X, y, target_names = np.array(newsgroups.data), np.array(newsgroups.target), np.array(newsgroups.target_names)

print("SAMPLE",X[0])

print("SHAPE",X.shape)

print("TARGET",target_names[y[0]])

SAMPLE From: lerxst@wam.umd.edu (where's my thing)

Subject: WHAT car is this!?

Nntp-Posting-Host: rac3.wam.umd.edu

Organization: University of Maryland, College Park

Lines: 15

I was wondering if anyone out there could enlighten me on this car I saw

the other day. It was a 2-door sports car, looked to be from the late 60s/

early 70s. It was called a Bricklin. The doors were really small. In addition,

the front bumper was separate from the rest of the body. This is

all I know. If anyone can tellme a model name, engine specs, years

of production, where this car is made, history, or whatever info you

have on this funky looking car, please e-mail.

Thanks,

- IL

---- brought to you by your neighborhood Lerxst ----

SHAPE (11314,)

TARGET rec.autos

Projection

To project the unstructured text dataset down to 2 fixed dimensions, we will set up a function pipeline. Each function takes the output of the previous function as its input.

We will try out “Latent Semantic Char-Gram Analysis followed by Isometric Mapping”.

1. TFIDF-vectorize character n-grams of length 1 to 6, discarding chargrams that occur in more than 83% of the postings (max_df=0.83) or in fewer than 5% of them (min_df=0.05). Dimensionality = 13967.

2. Run TruncatedSVD with 100 components on this representation. TFIDF followed by Singular Value Decomposition is called Latent Semantic Analysis. Dimensionality = 100.

3. Run an Isomap embedding on the output of the previous step to project down to 2 dimensions. Dimensionality = 2.

4. MinMaxScale the output of the previous step. Dimensionality = 2.
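To make the `max_df` and `min_df` thresholds of the first step concrete, here is a minimal pure-Python sketch of document-frequency filtering on character n-grams. It is an illustration only, not scikit-learn's implementation: the helper names `char_ngrams` and `filter_by_document_frequency`, the toy documents, and the toy thresholds are all assumptions made for this example.

```python
from collections import Counter


def char_ngrams(text, n_min=1, n_max=6):
    """Return the set of character n-grams of length n_min..n_max in text."""
    text = text.lower()
    return {
        text[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(text) - n + 1)
    }


def filter_by_document_frequency(docs, min_df=0.05, max_df=0.83):
    """Keep n-grams whose share of documents lies within [min_df, max_df]."""
    doc_count = Counter()
    for doc in docs:
        # A set is used so each document counts at most once per n-gram.
        doc_count.update(char_ngrams(doc))
    n_docs = len(docs)
    return {
        gram
        for gram, count in doc_count.items()
        if min_df <= count / n_docs <= max_df
    }


# Hypothetical toy corpus; thresholds are chosen to suit four documents.
toy_docs = ["the car", "the bike", "the cat", "a dog"]
vocab = filter_by_document_frequency(toy_docs, min_df=0.5, max_df=0.6)
print(sorted(vocab))
```

With these toy thresholds, "ca" (2 of 4 documents) survives, while "the" (3 of 4) is too common and "bike" (1 of 4) is too rare.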

[3]:

mapper = km.KeplerMapper(verbose=2)

projected_X = mapper.fit_transform(X,

projection=[TfidfVectorizer(analyzer="char",

ngram_range=(1,6),

max_df=0.83,

min_df=0.05),

TruncatedSVD(n_components=100,

random_state=1729),

Isomap(n_components=2,

n_jobs=-1)],

scaler=[None, None, MinMaxScaler()])

print("SHAPE",projected_X.shape)

KeplerMapper(verbose=2)

..Composing projection pipeline of length 3:

Projections: TfidfVectorizer(analyzer='char', max_df=0.83, min_df=0.05, ngram_range=(1, 6))

TruncatedSVD(n_components=100, random_state=1729)

Isomap(n_jobs=-1)

Distance matrices: False

False

False

Scalers: None

None

MinMaxScaler()

..Projecting on data shaped (11314,)

..Projecting data using:

TfidfVectorizer(analyzer='char', max_df=0.83, min_df=0.05, ngram_range=(1, 6))

..Created projection shaped (11314, 13967)

..Projecting on data shaped (11314, 13967)

..Projecting data using:

TruncatedSVD(n_components=100, random_state=1729)

..Projecting on data shaped (11314, 100)

..Projecting data using:

Isomap(n_jobs=-1)

..Scaling with: MinMaxScaler()

SHAPE (11314, 2)

Mapping

We cover the projection with 10 intervals per dimension, each overlapping its neighbors by 33% (10*10=100 cubes total).
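As a rough illustration of what such a cover looks like along one dimension, the sketch below builds 10 equally sized intervals whose neighbors share 33% of their width. This is one common construction and is not claimed to match the internals of kmapper's `Cover`; the function name `cover_intervals` is hypothetical.

```python
def cover_intervals(lo, hi, n_intervals=10, perc_overlap=0.33):
    """Return (start, end) pairs of equally sized, overlapping intervals."""
    spacing = (hi - lo) / n_intervals        # distance between interval centers
    width = spacing / (1.0 - perc_overlap)   # widen so that neighbors overlap
    centers = [lo + spacing * (i + 0.5) for i in range(n_intervals)]
    # The outermost intervals extend slightly beyond [lo, hi].
    return [(c - width / 2, c + width / 2) for c in centers]


# The lens is MinMax-scaled, so each dimension spans [0, 1].
intervals = cover_intervals(0.0, 1.0)
first, second = intervals[0], intervals[1]
overlap_fraction = (first[1] - second[0]) / (first[1] - first[0])

# Taking every pair of intervals across two dimensions gives the cubes.
n_cubes_2d = len(intervals) ** 2
print(round(overlap_fraction, 2), n_cubes_2d)
```

A point that falls into the overlap of two intervals is assigned to both cubes, which is what later creates edges between clusters in neighboring cubes.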

We cluster on the projection itself (X=None). Alternatively, we could vectorize the original text data into an inverse_X and cluster on that.

For clustering we use agglomerative clustering with complete linkage (linkage="complete" in the code below), the cosine distance, and 3 clusters. Agglomerative clustering is a good clustering algorithm for TDA: it tends to produce pleasing, informative networks, and its single-linkage variant has strong theoretical guarantees (see functor and functoriality).
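The clusterer in the code cell is configured with a cosine affinity and `linkage="complete"`. The following pure-Python sketch shows what those two choices measure; it is not scikit-learn's implementation, and the helper names and toy points are assumptions for illustration.

```python
import math
from itertools import product


def cosine_distance(u, v):
    """1 minus the cosine of the angle between vectors u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def complete_linkage(cluster_a, cluster_b):
    """Distance between clusters = largest pairwise distance."""
    return max(cosine_distance(u, v) for u, v in product(cluster_a, cluster_b))


def single_linkage(cluster_a, cluster_b):
    """Distance between clusters = smallest pairwise distance."""
    return min(cosine_distance(u, v) for u, v in product(cluster_a, cluster_b))


# Hypothetical 2-D points, similar in spirit to rows of the scaled lens.
cluster_a = [(1.0, 0.1), (0.9, 0.2)]
cluster_b = [(0.1, 1.0), (0.5, 0.5)]
print(complete_linkage(cluster_a, cluster_b))
print(single_linkage(cluster_a, cluster_b))
```

Note that the cosine distance depends only on direction: `(1, 1)` and `(2, 2)` are at distance 0 from each other, which is worth keeping in mind when clustering a 2-dimensional, MinMax-scaled projection.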

[4]:

from sklearn import cluster

graph = mapper.map(projected_X,

X=None,

clusterer=cluster.AgglomerativeClustering(n_clusters=3,

linkage="complete",

affinity="cosine"),  # scikit-learn >= 1.2 renames this parameter to `metric`

cover=Cover(perc_overlap=0.33))

Mapping on data shaped (11314, 2) using lens shaped (11314, 2)

Minimal points in hypercube before clustering: 3

Creating 100 hypercubes.

> Found 3 clusters in hypercube 0.

> Found 3 clusters in hypercube 1.

> Found 3 clusters in hypercube 2.

> Found 3 clusters in hypercube 3.

Cube_4 is empty.

> Found 3 clusters in hypercube 5.

> Found 3 clusters in hypercube 6.

> Found 3 clusters in hypercube 7.

> Found 3 clusters in hypercube 8.

> Found 3 clusters in hypercube 9.

> Found 3 clusters in hypercube 10.

> Found 3 clusters in hypercube 11.

> Found 3 clusters in hypercube 12.

> Found 3 clusters in hypercube 13.

> Found 3 clusters in hypercube 14.

> Found 3 clusters in hypercube 15.

> Found 3 clusters in hypercube 16.

> Found 3 clusters in hypercube 17.

> Found 3 clusters in hypercube 18.

> Found 3 clusters in hypercube 19.

> Found 3 clusters in hypercube 20.

Cube_21 is empty.

> Found 3 clusters in hypercube 22.

> Found 3 clusters in hypercube 23.

> Found 3 clusters in hypercube 24.

> Found 3 clusters in hypercube 25.

> Found 3 clusters in hypercube 26.

> Found 3 clusters in hypercube 27.

> Found 3 clusters in hypercube 28.

> Found 3 clusters in hypercube 29.

> Found 3 clusters in hypercube 30.

> Found 3 clusters in hypercube 31.

> Found 3 clusters in hypercube 32.

> Found 3 clusters in hypercube 33.

> Found 3 clusters in hypercube 34.

> Found 3 clusters in hypercube 35.

> Found 3 clusters in hypercube 36.

> Found 3 clusters in hypercube 37.

> Found 3 clusters in hypercube 38.

> Found 3 clusters in hypercube 39.

> Found 3 clusters in hypercube 40.

> Found 3 clusters in hypercube 41.

> Found 3 clusters in hypercube 42.

> Found 3 clusters in hypercube 43.

> Found 3 clusters in hypercube 44.

> Found 3 clusters in hypercube 45.

> Found 3 clusters in hypercube 46.

> Found 3 clusters in hypercube 47.

> Found 3 clusters in hypercube 48.

> Found 3 clusters in hypercube 49.

> Found 3 clusters in hypercube 50.

> Found 3 clusters in hypercube 51.

> Found 3 clusters in hypercube 52.

> Found 3 clusters in hypercube 53.

> Found 3 clusters in hypercube 54.

> Found 3 clusters in hypercube 55.

> Found 3 clusters in hypercube 56.

> Found 3 clusters in hypercube 57.

> Found 3 clusters in hypercube 58.

> Found 3 clusters in hypercube 59.

> Found 3 clusters in hypercube 60.

> Found 3 clusters in hypercube 61.

> Found 3 clusters in hypercube 62.

> Found 3 clusters in hypercube 63.

> Found 3 clusters in hypercube 64.

> Found 3 clusters in hypercube 65.

> Found 3 clusters in hypercube 66.

> Found 3 clusters in hypercube 67.

> Found 3 clusters in hypercube 68.

> Found 3 clusters in hypercube 69.

> Found 3 clusters in hypercube 70.

> Found 3 clusters in hypercube 71.

> Found 3 clusters in hypercube 72.

> Found 3 clusters in hypercube 73.

> Found 3 clusters in hypercube 74.

> Found 3 clusters in hypercube 75.

> Found 3 clusters in hypercube 76.

Cube_77 is empty.

Cube_78 is empty.

> Found 3 clusters in hypercube 79.

> Found 3 clusters in hypercube 80.

> Found 3 clusters in hypercube 81.

> Found 3 clusters in hypercube 82.

> Found 3 clusters in hypercube 83.

Created 605 edges and 240 nodes in 0:00:01.848595.

Interpretable inverse X

Here we show the flexibility of KeplerMapper by creating an interpretable_inverse_X that is easier for humans to interpret.

For text, this can be TFIDF (1-3)-wordgrams, as we do here. For structured data, this can be regulatory/protected variables of interest, or the features selected by another model, say, the top 10% of features.
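To show where word-gram features such as 'don know' come from, here is a minimal sketch of (1-3)-wordgram extraction. It assumes a token pattern that keeps only tokens of two or more word characters (as scikit-learn's default pattern does) and uses a tiny, hypothetical stop list in place of the full "english" list; `word_ngrams` is an illustrative helper, not a library function.

```python
import re

# Tiny stand-in stop list (hypothetical); the real "english" list is much longer.
TOY_STOP_WORDS = {"what", "this", "is", "the", "a"}


def word_ngrams(text, n_min=1, n_max=3, stop_words=TOY_STOP_WORDS):
    """Lowercase, tokenize, drop stop words, then emit word n-grams."""
    # Tokens need at least two word characters, so "i" and the "t" of
    # "don't" are dropped.
    tokens = re.findall(r"\b\w\w+\b", text.lower())
    tokens = [t for t in tokens if t not in stop_words]
    return [
        " ".join(tokens[i:i + n])
        for n in range(n_min, n_max + 1)
        for i in range(len(tokens) - n + 1)
    ]


grams = word_ngrams("I don't know what car this is")
print(grams)
# ['don', 'know', 'car', 'don know', 'know car', 'don know car']
```

Because stop words are removed before the n-grams are formed, a bigram can join words that were not adjacent in the original sentence ("know car" here).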

[5]:

vec = TfidfVectorizer(analyzer="word",

strip_accents="unicode",

stop_words="english",

ngram_range=(1,3),

max_df=0.97,

min_df=0.02)

interpretable_inverse_X = vec.fit_transform(X).toarray()

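# get_feature_names() was removed in scikit-learn 1.2; newer versions provide get_feature_names_out() instead.
interpretable_inverse_X_names = vec.get_feature_names()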

print("SHAPE", interpretable_inverse_X.shape)

print("FEATURE NAMES SAMPLE", interpretable_inverse_X_names[:400])

SHAPE (11314, 947)

FEATURE NAMES SAMPLE ['00', '000', '10', '100', '11', '12', '13', '14', '15', '16', '17', '18', '19', '1992', '1993', '1993apr15', '20', '200', '21', '22', '23', '24', '25', '26', '27', '28', '29', '30', '31', '32', '33', '34', '35', '36', '37', '38', '39', '40', '408', '41', '42', '43', '44', '45', '49', '50', '500', '60', '70', '80', '90', '92', '93', 'able', 'ac', 'ac uk', 'accept', 'access', 'according', 'acs', 'act', 'action', 'actually', 'add', 'address', 'advance', 'advice', 'ago', 'agree', 'air', 'al', 'allow', 'allowed', 'america', 'american', 'andrew', 'answer', 'anti', 'anybody', 'apparently', 'appears', 'apple', 'application', 'apply', 'appreciate', 'appreciated', 'apr', 'apr 1993', 'apr 93', 'april', 'area', 'aren', 'argument', 'article', 'article 1993apr15', 'ask', 'asked', 'asking', 'assume', 'att', 'att com', 'au', 'available', 'average', 'avoid', 'away', 'bad', 'base', 'baseball', 'based', 'basic', 'basically', 'basis', 'bbs', 'believe', 'best', 'better', 'bible', 'big', 'bike', 'bit', 'bitnet', 'black', 'blue', 'board', 'bob', 'body', 'book', 'books', 'bought', 'box', 'break', 'brian', 'bring', 'brought', 'btw', 'build', 'building', 'built', 'bus', 'business', 'buy', 'ca', 'ca lines', 'california', 'called', 'came', 'canada', 'car', 'card', 'cards', 'care', 'carry', 'cars', 'case', 'cases', 'cause', 'cc', 'center', 'certain', 'certainly', 'chance', 'change', 'changed', 'cheap', 'check', 'chicago', 'children', 'chip', 'choice', 'chris', 'christ', 'christian', 'christians', 'church', 'city', 'claim', 'claims', 'class', 'clear', 'clearly', 'cleveland', 'clinton', 'clipper', 'close', 'cmu', 'cmu edu', 'code', 'college', 'color', 'colorado', 'com', 'com organization', 'com writes', 'come', 'comes', 'coming', 'comment', 'comments', 'common', 'communications', 'comp', 'company', 'complete', 'completely', 'computer', 'computer science', 'computing', 'condition', 'consider', 'considered', 'contact', 'continue', 'control', 'copy', 'corp', 'corporation', 
'correct', 'cost', 'couldn', 'country', 'couple', 'course', 'court', 'cover', 'create', 'created', 'crime', 'cs', 'cso', 'cso uiuc', 'cso uiuc edu', 'cup', 'current', 'currently', 'cut', 'cwru', 'cwru edu', 'data', 'date', 'dave', 'david', 'day', 'days', 'dead', 'deal', 'death', 'decided', 'defense', 'deleted', 'department', 'dept', 'design', 'designed', 'details', 'development', 'device', 'did', 'didn', 'die', 'difference', 'different', 'difficult', 'directly', 'disclaimer', 'discussion', 'disk', 'display', 'distribution', 'distribution na', 'distribution na lines', 'distribution usa', 'distribution usa lines', 'distribution world', 'distribution world nntp', 'distribution world organization', 'division', 'dod', 'does', 'does know', 'doesn', 'doing', 'don', 'don know', 'don think', 'don want', 'dos', 'doubt', 'dr', 'drive', 'driver', 'drivers', 'early', 'earth', 'easily', 'east', 'easy', 'ed', 'edu', 'edu article', 'edu au', 'edu david', 'edu organization', 'edu organization university', 'edu reply', 'edu subject', 'edu writes', 'effect', 'email', 'encryption', 'end', 'engineering', 'entire', 'error', 'especially', 'evidence', 'exactly', 'example', 'excellent', 'exist', 'exists', 'expect', 'experience', 'explain', 'expressed', 'extra', 'face', 'fact', 'faith', 'family', 'fan', 'faq', 'far', 'fast', 'faster', 'fax', 'federal', 'feel', 'figure', 'file', 'files', 'final', 'finally', 'fine', 'folks', 'follow', 'following', 'force', 'forget', 'form', 'frank', 'free', 'friend', 'ftp', 'future', 'game', 'games', 'gave', 'general', 'generally', 'germany', 'gets', 'getting', 'given', 'gives', 'giving', 'gmt', 'god', 'goes', 'going', 'gone', 'good', 'got', 'gov', 'government', 'graphics', 'great', 'greatly', 'ground', 'group', 'groups', 'guess', 'gun', 'guns', 'guy', 'half', 'hand', 'happen', 'happened', 'happens', 'happy', 'hard', 'hardware', 'haven', 'having', 'head', 'hear', 'heard', 'heart', 'hell']

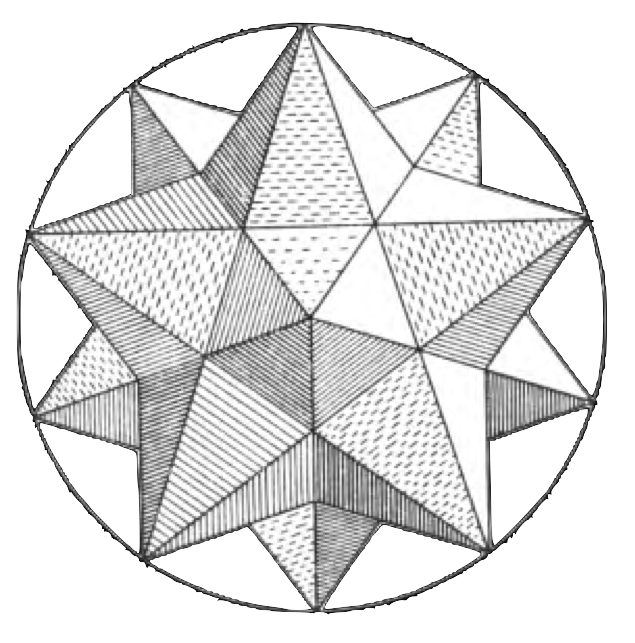

Visualization

We use interpretable_inverse_X as the inverse_X during visualization. This way we get cluster statistics that are more informative and interpretable to humans (wordgrams instead of chargrams).

We also pass the projected_X to get cluster statistics for the projection. For custom_tooltips we use a textual description of the label.

The color function is simply the multi-class ground truth represented as a non-negative integer.
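Coloring by ground truth amounts to summarizing, per node, the labels of the postings that fall into it. The sketch below computes the majority label of each node by hand. It assumes the graph is a dictionary whose "nodes" entry maps node IDs to lists of row indices; the toy graph, labels, and the helper name `majority_label_per_node` are made up for illustration.

```python
from collections import Counter


def majority_label_per_node(graph, labels, label_names):
    """Map each node ID to (most common label name, share of its members)."""
    summary = {}
    for node_id, members in graph["nodes"].items():
        counts = Counter(labels[i] for i in members)
        label, count = counts.most_common(1)[0]
        summary[node_id] = (label_names[label], count / len(members))
    return summary


# Hypothetical toy graph with two overlapping nodes (row 2 is in both).
toy_graph = {
    "nodes": {"cube0_cluster0": [0, 1, 2], "cube1_cluster0": [2, 3]},
    "links": {"cube0_cluster0": ["cube1_cluster0"]},
}
toy_y = [0, 0, 1, 1]
toy_names = ["rec.autos", "sci.space"]

summary = majority_label_per_node(toy_graph, toy_y, toy_names)
for node_id, (name, share) in summary.items():
    print(node_id, name, round(share, 2))
```

Nodes with a share close to 1.0 are dominated by a single newsgroup, whereas mixed nodes indicate regions of the projection where topics blend.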

[6]:

_ = mapper.visualize(graph,

X=interpretable_inverse_X,

X_names=interpretable_inverse_X_names,

path_html="output/newsgroups20.html",

lens=projected_X,

lens_names=["ISOMAP1", "ISOMAP2"],

title="Newsgroups20: Latent Semantic Char-gram Analysis with Isometric Embedding",

custom_tooltips=np.array([target_names[ys] for ys in y]),

color_values=y,

color_function_name='target')

#jupyter.display("output/newsgroups20.html")

Wrote visualization to: output/newsgroups20.html

scikit-tda/kepler-mapper
