Detecting Encrypted TOR Traffic with Boosting and Topological Data Analysis¶

HJ van Veen - MLWave

Last run 2021-10-08 with kmapper version 2.0.1

We establish strong baselines for both supervised and unsupervised detection of encrypted TOR traffic.

Introduction¶

Gradient Boosted Decision Trees (GBDT) is a very powerful learning algorithm for supervised learning on tabular data [1]. Modern implementations include XGBoost [2], CatBoost [3], LightGBM [4] and scikit-learn’s GradientBoostingClassifier [5]. Of these, XGBoost in particular has seen tremendous success in machine learning competitions [6], starting with its introduction during the Higgs Boson Detection challenge in 2014 [7]. The success of XGBoost can be explained along multiple dimensions: it is a robust implementation of the original algorithms; it is very fast, allowing data scientists to quickly find better parameters [8]; it does not suffer much from overfitting; it is invariant to feature scaling; and it has an active community providing constant improvements, such as early stopping [9] and GPU support [10].

Anomaly detection algorithms automatically find samples that differ from regular samples. Many methods exist. We use the Isolation Forest in combination with nearest neighbor distances. The Isolation Forest works by randomly splitting up the data [11]. Outliers, on average, are easier to isolate through splitting. Nearest neighbor distance looks at the summed distances between a sample and its five nearest neighbors. Outliers, on average, lie farther from their nearest neighbors than regular samples do [12].
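The nearest neighbor score can be sketched in a few lines of plain Python. This is a minimal illustration on made-up 2-D points, not the code used for the experiments; the function name `summed_knn_distance` and the toy data are assumptions.

```python
import math


def summed_knn_distance(points, k=5):
    """Return, for every point, the sum of distances to its k nearest neighbors."""
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, smallest first.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]))
    return scores


# Toy data (hypothetical): a tight 4x4 grid of regular samples plus one far-away sample.
points = [(float(x), float(y)) for x in range(4) for y in range(4)] + [(20.0, 20.0)]
scores = summed_knn_distance(points, k=5)

# The sample with the largest summed distance is the strongest outlier candidate.
outlier_index = max(range(len(points)), key=lambda i: scores[i])
print(outlier_index, round(scores[outlier_index], 2))
```

The brute-force loop is quadratic in the number of samples, which is fine for a sketch but would likely need a spatial index on a dataset of this size.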

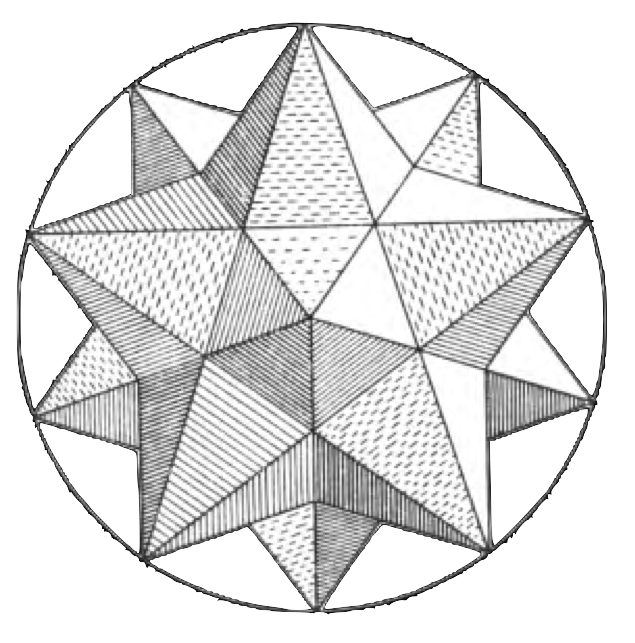

Topological Data Analysis (TDA) is concerned with the meaning, shape, and connectedness of data [13]. Benefits of TDA include unsupervised data exploration / automatic hypothesis generation, the ability to deal with noise and missing values, invariance, and the generation of meaningful compressed summaries. TDA has been applied effectively in a number of diverse fields: healthcare [14], computational biology [15], control theory [16], community detection [17], machine learning [18], sports analysis [19], and information security [20]. One tool from TDA is the \(MAPPER\) algorithm. \(MAPPER\) turns data and data projections into a graph by covering the projection with overlapping intervals and clustering the data inside each interval [21]. To guide exploration, the nodes of the graph may be colored with a function of interest [22]. There is a growing number of implementations of \(MAPPER\). We use the open source implementation KeplerMapper from scikit-TDA [23].
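The cover-then-cluster idea can be illustrated with a deliberately small sketch. It assumes a one-dimensional projection (lens), evenly spaced overlapping intervals, and a simple distance-threshold clustering as a stand-in for a real clusterer. All function names and the toy data are hypothetical, and KeplerMapper's own cover and clustering differ in detail.

```python
import math
from itertools import combinations


def interval_cover(lo, hi, n_intervals, overlap):
    """Evenly spaced intervals over [lo, hi], widened so that neighbors overlap."""
    step = (hi - lo) / n_intervals
    width = step / (1.0 - overlap)
    cover = []
    for i in range(n_intervals):
        center = lo + step * (i + 0.5)
        cover.append((center - width / 2, center + width / 2))
    return cover


def threshold_clusters(points, indices, eps):
    """Group indices so that points within eps of each other share a cluster."""
    clusters = []
    unvisited = set(indices)
    while unvisited:
        seed = unvisited.pop()
        cluster, frontier = {seed}, [seed]
        while frontier:
            current = frontier.pop()
            near = {j for j in unvisited
                    if math.dist(points[current], points[j]) <= eps}
            unvisited -= near
            cluster |= near
            frontier.extend(near)
        clusters.append(frozenset(cluster))
    return clusters


def mapper_graph(points, lens, n_intervals, overlap, eps):
    """Nodes are clusters found inside each interval; edges join clusters sharing points."""
    nodes = []
    for a, b in interval_cover(min(lens), max(lens), n_intervals, overlap):
        members = [i for i, value in enumerate(lens) if a <= value <= b]
        nodes.extend(threshold_clusters(points, members, eps))
    edges = {(u, v) for u, v in combinations(range(len(nodes)), 2)
             if nodes[u] & nodes[v]}
    return nodes, edges


# Toy data (hypothetical): two parallel lines, projected onto the x-axis.
points = [(float(x), 0.0) for x in range(10)] + [(float(x), 10.0) for x in range(10)]
lens = [p[0] for p in points]
nodes, edges = mapper_graph(points, lens, n_intervals=3, overlap=0.5, eps=1.5)
print(len(nodes), len(edges))
```

On this toy input the graph keeps the two lines apart: nodes from the same line are chained together through the points they share in the overlaps, and no edge crosses between the lines.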

The TOR network allows users to communicate and host content while preserving privacy and anonymity [24]. As such, it can be used by dissidents and other people who prefer not to be tracked by commercial companies or governments. But these strong privacy and anonymity features are also attractive to criminals. A 2016 study in ‘Survival - Global Politics and Strategy’ found that at least 57% of TOR websites are involved in illicit behavior, ranging from the trade in illegal arms, counterfeit ID documents, pornography, and drugs, through money laundering and credit card fraud, to the sharing of violent material, such as bomb making tutorials and terrorist propaganda [25].

Network Intrusion Detection Systems are a first line of defense for governments and companies [26]. An undetected hacker will try to elevate their privileges, moving from the weakest link to more hardened system-critical network nodes [27]. If the hacker’s goal is to get access to sensitive data (for instance, for resale, industrial espionage, or extortion), then any stolen data needs to be exfiltrated. Similarly, cryptolockers often need to communicate with a command & control server outside the network. Depending on the level of sophistication of the malware or hackers, exfiltration may be open and visible, run encrypted through the TOR network in an effort to hide the destination, or use advanced DNS tunneling techniques.

Motivation¶

Current Network Intrusion Detection Systems, much like the old spam detectors, rely mostly on rules, signatures, and anomaly detection. Labeled data is scarce. Writing rules is a very costly task requiring domain expertise. Signatures may fail to catch new types of attacks until they are updated. Anomalous/unusual behavior is not necessarily suspicious/adversarial behavior.

Machine Learning for Information Security suffers a lot from poor false positive rates. False positives lead to alarm fatigue and can swamp an intelligence analyst with irrelevant work.

Despite the possibility of false positives, it is often better to be safe than sorry. Suspicious network behavior, such as outgoing connections to the TOR network, requires immediate attention. A network node can be shut down remotely, after which a security engineer can investigate the machine. The best practice of multi-layered security makes this possible [28]: instead of a single firewall to rule them all, hackers can be detected in various stages of their network intrusion, up to the final step of data exfiltration.

Data¶

We use a dataset written for the paper “Characterization of Tor Traffic Using Time Based Features” (Lashkari et al.) [29], graciously provided by the Canadian Institute for Cybersecurity [30]. This dataset combines older research on nonTOR network traffic with more recently captured TOR traffic (both were created on the same network) [31]. The data includes features that are more specific to the network used, such as the source and destination IP/Port, and a range of time-based features with a 10 second lag.

| Feature | Type | Description | Time-based |
|---|---|---|---|
| ‘Source IP’ | Object | Source IPv4 address. String with dots. | No |
| ‘ Source Port’ | Float | Source port sending packets. | No |
| ‘ Destination IP’ | Object | Destination IPv4 address. | No |
| ‘ Destination Port’ | Float | Destination port receiving packets. | No |
| ‘ Protocol’ | Float | Integer [5-17] denoting protocol used. | No |
| ‘ Flow Duration’ | Float | Length of connection in seconds. | Yes |
| ‘ Flow Bytes/s’ | Float | Bytes per second sent. | Yes |
| ‘ Flow Packets/s’ | Object | Packets per second sent. Contains | Yes |
| ‘ Flow IAT Mean’ | Float | Flow Inter Arrival Time. | Yes |
| ‘ Flow IAT Std’ | Float | | Yes |
| ‘ Flow IAT Max’ | Float | | Yes |
| ‘ Flow IAT Min’ | Float | | Yes |
| ‘Fwd IAT Mean’ | Float | Forward Inter Arrival Time. | Yes |
| ‘ Fwd IAT Std’ | Float | | Yes |
| ‘ Fwd IAT Max’ | Float | | Yes |
| ‘ Fwd IAT Min’ | Float | | Yes |
| ‘Bwd IAT Mean’ | Float | Backwards Inter Arrival Time. | Yes |
| ‘ Bwd IAT Std’ | Float | | Yes |
| ‘ Bwd IAT Max’ | Float | | Yes |
| ‘ Bwd IAT Min’ | Float | | Yes |
| ‘Active Mean’ | Float | Average amount of time in seconds before connection went idle. | Yes |
| ‘ Active Std’ | Float | | Yes |
| ‘ Active Max’ | Float | | Yes |
| ‘ Active Min’ | Float | | Yes |
| ‘Idle Mean’ | Float | Average amount of time in seconds before connection became active. | Yes |
| ‘ Idle Std’ | Float | Zero variance feature. | Yes |
| ‘ Idle Max’ | Float | | Yes |
| ‘ Idle Min’ | Float | | Yes |
| ‘label’ | Object | Either | |

Experimental setup¶

Supervised ML. We establish a strong baseline with XGBoost on the full data and on a subset (only time-based features, which generalize better to new domains). We follow the dataset standard of creating a 20% holdout validation set, and use 5-fold stratified cross-validation for parameter tuning [32]. For tuning we use random search on sane parameter ranges, as random search is easy to implement and, given enough time, will equal or beat more sophisticated methods [33]. We do not use feature selection, but opt to let our learning algorithm deal with uninformative features. Missing values are also handled by XGBoost and not manually imputed or hardcoded.

Unsupervised ML. We use \(MAPPER\) in combination with the Isolation Forest and the summed distances to the five nearest neighbors. We use an overlap percentage of 50% and 40 intervals per dimension for a total of 1600 hypercubes. Clustering is done with agglomerative clustering using the euclidean distance metric and 3 clusters per interval. For these experiments we use only the time-based features. We don’t scale the data, despite only Isolation Forest being scale-invariant.
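The hypercube count follows directly from the cover settings. The sketch below assumes a two-dimensional lens (one dimension per anomaly score, which is what 40 × 40 = 1600 implies) and one common convention for a 50% overlap, where each interval is twice as wide as the spacing between interval centers. The helper names and the sample values are hypothetical, and KeplerMapper's exact cover may differ.

```python
from itertools import product


def interval_cover(lo, hi, n_intervals, overlap):
    """Evenly spaced intervals over [lo, hi], widened so that neighbors overlap."""
    step = (hi - lo) / n_intervals
    width = step / (1.0 - overlap)
    return [(lo + step * (i + 0.5) - width / 2, lo + step * (i + 0.5) + width / 2)
            for i in range(n_intervals)]


def containing(cover, value):
    """Indices of all intervals that contain the value."""
    return [i for i, (a, b) in enumerate(cover) if a <= value <= b]


n_intervals, overlap = 40, 0.5
cover_1d = interval_cover(0.0, 40.0, n_intervals, overlap)

# One hypercube per pair of intervals, one interval from each lens dimension.
hypercubes = list(product(cover_1d, cover_1d))
print(len(hypercubes))

# A sample (hypothetical lens values) falls into several overlapping hypercubes.
sample = (10.25, 30.75)
cells = list(product(containing(cover_1d, sample[0]), containing(cover_1d, sample[1])))
print(cells)
```

Because neighboring intervals overlap, an interior sample belongs to two intervals per dimension and therefore to four hypercubes. These shared memberships are what later connect the nodes of the graph.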

[1]:

import numpy as np
import pandas as pd
import xgboost
from sklearn import model_selection, metrics

Data Prep¶

- The last 39 records in `SelectedFeatures-10s-TOR-NonTOR.csv` look like data-collection artifacts, so we drop them.
- There are string values `"Infinity"` inside the data, causing mixed types.
- We need to label-encode the target column.
- We turn the IP addresses into floats by removing the dots.
- We also create a subset of features by removing `Source Port`, `Source IP`, `Destination Port`, `Destination IP`, and `Protocol`. This is to avoid overfitting, improve future generalization, and focus only on the time-based features, as most other researchers have done.
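Removing the dots from an IP address is a lossy encoding, since two different addresses can map to the same number. The sketch below shows such a collision and, as an assumption rather than something this notebook does, one collision-free alternative based on the standard-library `ipaddress` module. The example addresses and both helper names are hypothetical.

```python
import ipaddress


def dots_removed(ip):
    """Encoding used in this notebook: drop the dots, parse the digits as a float."""
    return float(ip.replace(".", ""))


def as_integer(ip):
    """Alternative (not used here): the address as its 32-bit integer value."""
    return int(ipaddress.IPv4Address(ip))


a, b = "1.11.1.1", "11.1.1.1"  # two distinct, made-up addresses

# Both strings collapse to the digits "11111", so the float encoding collides.
print(dots_removed(a), dots_removed(b))

# The integer encoding keeps the two addresses distinct.
print(as_integer(a), as_integer(b))
```

Since the IP columns are excluded from the time-based feature subset, this only matters for the experiments that use all features.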

[2]:

def load_and_prep_data():
    # download `SelectedFeatures-10s-TOR-NonTOR.csv` dataset from here:
    # * https://www.unb.ca/cic/datasets/tor.html
    # * http://205.174.165.80/CICDataset/ISCX-Tor-NonTor-2017/
    # * https://github.com/rambasnet/DeepLearning-TorTraffic
    df = pd.read_csv("input/SelectedFeatures-10s-TOR-NonTOR.csv")
    # drop the last 39 records (data-collection artifacts)
    df.drop(df.index[-39:], inplace=True)
    # replace the string value "Infinity" with a -1 sentinel
    df.replace('Infinity', -1, inplace=True)
    # label-encode the target column
    df["label"] = df["label"].map({"nonTOR": 0, "TOR": 1})
    # turn the IP addresses into floats by removing the dots
    df["Source IP"] = df["Source IP"].apply(lambda x: float(x.replace(".", "")))
    df[" Destination IP"] = df[" Destination IP"].apply(lambda x: float(x.replace(".", "")))
    return df

[3]:

df = load_and_prep_data()

[4]:

features_all = [c for c in df.columns if c not in ['label']]

features = [c for c in df.columns if c not in
            ['Source IP',
             ' Source Port',
             ' Destination IP',
             ' Destination Port',
             ' Protocol',
             'label']]

X = np.array(df[features])
X_all = np.array(df[features_all])
y = np.array(df.label)

print(X.shape, np.mean(y))

(67795, 23) 0.11865181798067705

Local evaluation setup¶

We create a stratified holdout set of 20%. Any modeling choices (such as parameter tuning) are guided by 5-fold stratified cross-validation on the remaining dataset.

[5]:

splitter = model_selection.StratifiedShuffleSplit(
    n_splits=1,
    test_size=0.2,
    random_state=0)

for train_index, test_index in splitter.split(X, y):
    X_train, X_holdout = X[train_index], X[test_index]
    X_train_all, X_holdout_all = X_all[train_index], X_all[test_index]
    y_train, y_holdout = y[train_index], y[test_index]

print(X_train.shape, X_holdout.shape)

(54236, 23) (13559, 23)
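What "stratified" means here can be shown with a small stand-alone sketch: the holdout is drawn per class, so the TOR/nonTOR ratio is the same in both parts. This is an illustrative stand-in for `StratifiedShuffleSplit`, not its implementation; the function name and the toy labels are assumptions.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, test_size=0.2, seed=0):
    """Split indices into train/test so that each class keeps its share in both parts."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_size)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Toy labels (hypothetical): 12% positives, similar to the share of TOR samples.
labels = [1] * 120 + [0] * 880
train_idx, test_idx = stratified_holdout(labels)

train_rate = sum(labels[i] for i in train_idx) / len(train_idx)
test_rate = sum(labels[i] for i in test_idx) / len(test_idx)
print(len(train_idx), len(test_idx), train_rate, test_rate)
```

With roughly 12% positive samples, a purely random split could shift the class balance between the two parts. Splitting per class avoids that.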

5-fold non-tuned XGBoost¶

[6]:

model = xgboost.XGBClassifier(seed=0)
print(model)

skf = model_selection.StratifiedKFold(
    n_splits=5,
    shuffle=True,
    random_state=0)

for i, (train_index, test_index) in enumerate(skf.split(X_train, y_train)):
    X_train_fold, X_test_fold = X_train[train_index], X_train[test_index]
    y_train_fold, y_test_fold = y_train[train_index], y_train[test_index]

    model.fit(X_train_fold, y_train_fold)
    probas = model.predict_proba(X_test_fold)[:,1]
    preds = (probas > 0.5).astype(int)

    print("-"*60)
    print("Fold: %d (%s/%s)" %(i, X_train_fold.shape, X_test_fold.shape))
    print(metrics.classification_report(y_test_fold, preds, target_names=["nonTOR", "TOR"]))
    print("Confusion Matrix: \n%s\n"%metrics.confusion_matrix(y_test_fold, preds))
    print("Log loss : %f" % (metrics.log_loss(y_test_fold, probas)))
    print("AUC : %f" % (metrics.roc_auc_score(y_test_fold, probas)))
    print("Accuracy : %f" % (metrics.accuracy_score(y_test_fold, preds)))
    print("Precision: %f" % (metrics.precision_score(y_test_fold, preds)))
    print("Recall : %f" % (metrics.recall_score(y_test_fold, preds)))
    print("F1-score : %f" % (metrics.f1_score(y_test_fold, preds)))

XGBClassifier(base_score=None, booster=None, colsample_bylevel=None,

colsample_bynode=None, colsample_bytree=None, gamma=None,

gpu_id=None, importance_type='gain', interaction_constraints=None,

learning_rate=None, max_delta_step=None, max_depth=None,

min_child_weight=None, missing=nan, monotone_constraints=None,

n_estimators=100, n_jobs=None, num_parallel_tree=None,

random_state=None, reg_alpha=None, reg_lambda=None,

scale_pos_weight=None, seed=0, subsample=None, tree_method=None,

validate_parameters=None, verbosity=None)

/mnt/c/Users/deargle/projects/kepler-mapper/.venv/lib/python3.6/site-packages/xgboost/sklearn.py:1146: UserWarning: The use of label encoder in XGBClassifier is deprecated and will be removed in a future release. To remove this warning, do the following: 1) Pass option use_label_encoder=False when constructing XGBClassifier object; and 2) Encode your labels (y) as integers starting with 0, i.e. 0, 1, 2, ..., [num_class - 1].

warnings.warn(label_encoder_deprecation_msg, UserWarning)

[11:54:35] WARNING: ../src/learner.cc:1095: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'binary:logistic' was changed from 'error' to 'logloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

------------------------------------------------------------

Fold: 0 ((43388, 23)/(10848, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9561

TOR 0.96 0.96 0.96 1287

accuracy 0.99 10848

macro avg 0.98 0.98 0.98 10848

weighted avg 0.99 0.99 0.99 10848

Confusion Matrix:

[[9513 48]

[ 54 1233]]

Log loss : 0.026506

AUC : 0.998835

Accuracy : 0.990597

Precision: 0.962529

Recall : 0.958042

F1-score : 0.960280

------------------------------------------------------------

Fold: 1 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 1.00 0.99 9560

TOR 0.97 0.95 0.96 1287

accuracy 0.99 10847

macro avg 0.98 0.97 0.98 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9521 39]

[ 67 1220]]

Log loss : 0.027315

AUC : 0.998858

Accuracy : 0.990228

Precision: 0.969023

Recall : 0.947941

F1-score : 0.958366

------------------------------------------------------------

Fold: 2 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9560

TOR 0.96 0.95 0.95 1287

accuracy 0.99 10847

macro avg 0.98 0.97 0.97 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9508 52]

[ 65 1222]]

Log loss : 0.032290

AUC : 0.997622

Accuracy : 0.989214

Precision: 0.959184

Recall : 0.949495

F1-score : 0.954315

------------------------------------------------------------

Fold: 3 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9560

TOR 0.95 0.96 0.96 1287

accuracy 0.99 10847

macro avg 0.97 0.98 0.97 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9500 60]

[ 54 1233]]

Log loss : 0.027810

AUC : 0.998797

Accuracy : 0.989490

Precision: 0.953596

Recall : 0.958042

F1-score : 0.955814

------------------------------------------------------------

Fold: 4 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9560

TOR 0.96 0.95 0.96 1287

accuracy 0.99 10847

macro avg 0.98 0.97 0.97 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9508 52]

[ 62 1225]]

Log loss : 0.027349

AUC : 0.998739

Accuracy : 0.989490

Precision: 0.959280

Recall : 0.951826

F1-score : 0.955538
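The log loss reported for each fold penalizes confident wrong probabilities much more heavily than hesitant ones. A minimal plain-Python version of the binary log loss makes this visible; the clipping constant `eps` and the toy probabilities are assumptions chosen for illustration.

```python
import math


def log_loss(y_true, probas, eps=1e-15):
    """Mean negative log-likelihood of the true labels under the predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, probas):
        p = min(max(p, eps), 1 - eps)  # keep log() away from 0 and 1
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


y_true = [1, 0, 1, 0]
good = [0.9, 0.1, 0.8, 0.2]             # all four samples on the correct side of 0.5
one_bad_miss = [0.9, 0.1, 0.01, 0.2]    # third sample is confidently wrong

print(log_loss(y_true, good))
print(log_loss(y_true, one_bad_miss))
```

Accuracy at a 0.5 threshold would only register the one misclassified sample, while log loss also reflects how confident the wrong prediction was.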

Hyper parameter tuning¶

We found the parameters below by running a random search on the first fold for ~50 iterations (minimizing log loss). We used a closed-source, AWS-distributed auto-tuning library called “Cher” with the following parameter ranges:

"XGBClassifier": {

"max_depth": (2,12),

"n_estimators": (20, 2500),

"objective": ["binary:logistic"],

"missing": np.nan,

"gamma": [0, 0, 0, 0, 0, 0.01, 0.1, 0.2, 0.3, 0.5, 1., 10., 100.],

"learning_rate": [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.1, 0.15, 0.2, 0.1 ,0.1],

"min_child_weight": [1, 1, 1, 1, 2, 3, 4, 5, 1, 6, 7, 8, 9, 10, 11, 15, 30, 60, 100, 1, 1, 1],

"max_delta_step": [0, 0, 0, 0, 0, 1, 2, 5, 8],

"nthread": -1,

"subsample": [i/100. for i in range(20,100)],

"colsample_bytree": [i/100. for i in range(20,100)],

"colsample_bylevel": [i/100. for i in range(20,100)],

"reg_alpha": [0, 0, 0, 0, 0, 0.00000001, 0.00000005, 0.0000005, 0.000005],

"reg_lambda": [1, 1, 1, 1, 2, 3, 4, 5, 1],

"scale_pos_weight": 1,

"base_score": 0.5,

"seed": (0,999999)

}
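Since “Cher” is closed-source, the sampling step of such a random search can only be sketched under assumptions: tuples are read as inclusive integer ranges, lists as candidate values drawn uniformly, and scalars as fixed settings. The sketch below uses a subset of the ranges above; `sample_params` is a hypothetical helper, not part of any library.

```python
import random

# A subset of the ranges listed above. How "Cher" interprets tuples and lists
# is not documented here, so the reading below is an assumption.
search_space = {
    "max_depth": (2, 12),
    "n_estimators": (20, 2500),
    "gamma": [0, 0, 0, 0, 0, 0.01, 0.1, 0.2, 0.3, 0.5, 1.0, 10.0, 100.0],
    "learning_rate": [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09,
                      0.1, 0.15, 0.2, 0.1, 0.1],
    "subsample": [i / 100.0 for i in range(20, 100)],
    "scale_pos_weight": 1,
}


def sample_params(space, rng):
    """Draw one random parameter setting from the search space."""
    params = {}
    for name, spec in space.items():
        if isinstance(spec, tuple):      # (low, high): inclusive integer range
            params[name] = rng.randint(spec[0], spec[1])
        elif isinstance(spec, list):     # list: pick one candidate uniformly
            params[name] = rng.choice(spec)
        else:                            # scalar: fixed value
            params[name] = spec
    return params


rng = random.Random(0)
candidates = [sample_params(search_space, rng) for _ in range(50)]
print(candidates[0])
```

Each candidate would then be fitted and scored by log loss on the validation fold, keeping the setting with the lowest loss. Under uniform sampling, values repeated in a list (such as the five zeros for `gamma`) are simply drawn more often.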

[7]:

model = xgboost.XGBClassifier(base_score=0.5, colsample_bylevel=0.68, colsample_bytree=0.84,
                              gamma=0.1, learning_rate=0.1, max_delta_step=0, max_depth=11,
                              min_child_weight=1, missing=np.nan, n_estimators=1122, nthread=-1,
                              objective='binary:logistic', reg_alpha=0.0, reg_lambda=4,
                              scale_pos_weight=1, seed=189548, silent=True, subsample=0.98)

5-fold tuned XGBoost¶

[8]:

print(model)

for i, (train_index, test_index) in enumerate(skf.split(X_train, y_train)):
    X_train_fold, X_test_fold = X_train[train_index], X_train[test_index]
    y_train_fold, y_test_fold = y_train[train_index], y_train[test_index]

    model.fit(X_train_fold, y_train_fold)
    probas = model.predict_proba(X_test_fold)[:,1]
    preds = (probas > 0.5).astype(int)

    print("-"*60)
    print("Fold: %d (%s/%s)" %(i, X_train_fold.shape, X_test_fold.shape))
    print(metrics.classification_report(y_test_fold, preds, target_names=["nonTOR", "TOR"]))
    print("Confusion Matrix: \n%s\n"%metrics.confusion_matrix(y_test_fold, preds))
    print("Log loss : %f" % (metrics.log_loss(y_test_fold, probas)))
    print("AUC : %f" % (metrics.roc_auc_score(y_test_fold, probas)))
    print("Accuracy : %f" % (metrics.accuracy_score(y_test_fold, preds)))
    print("Precision: %f" % (metrics.precision_score(y_test_fold, preds)))
    print("Recall : %f" % (metrics.recall_score(y_test_fold, preds)))
    print("F1-score : %f" % (metrics.f1_score(y_test_fold, preds)))

XGBClassifier(base_score=0.5, booster=None, colsample_bylevel=0.68,

colsample_bynode=None, colsample_bytree=0.84, gamma=0.1,

gpu_id=None, importance_type='gain', interaction_constraints=None,

learning_rate=0.1, max_delta_step=0, max_depth=11,

min_child_weight=1, missing=nan, monotone_constraints=None,

n_estimators=1122, n_jobs=None, nthread=-1,

num_parallel_tree=None, random_state=None, reg_alpha=0.0,

reg_lambda=4, scale_pos_weight=1, seed=189548, silent=True,

subsample=0.98, tree_method=None, validate_parameters=None,

verbosity=None)

[11:55:05] WARNING: ../src/learner.cc:573:

Parameters: { "silent" } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

------------------------------------------------------------

Fold: 0 ((43388, 23)/(10848, 23))

precision recall f1-score support

nonTOR 1.00 0.99 1.00 9561

TOR 0.96 0.96 0.96 1287

accuracy 0.99 10848

macro avg 0.98 0.98 0.98 10848

weighted avg 0.99 0.99 0.99 10848

Confusion Matrix:

[[9512 49]

[ 46 1241]]

Log loss : 0.027303

AUC : 0.998891

Accuracy : 0.991243

Precision: 0.962016

Recall : 0.964258

F1-score : 0.963135

------------------------------------------------------------

Fold: 1 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 1.00 1.00 9560

TOR 0.97 0.95 0.96 1287

accuracy 0.99 10847

macro avg 0.98 0.97 0.98 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9528 32]

[ 63 1224]]

Log loss : 0.025370

AUC : 0.999025

Accuracy : 0.991242

Precision: 0.974522

Recall : 0.951049

F1-score : 0.962643

------------------------------------------------------------

Fold: 2 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9560

TOR 0.96 0.95 0.96 1287

accuracy 0.99 10847

macro avg 0.98 0.97 0.97 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9512 48]

[ 66 1221]]

Log loss : 0.031088

AUC : 0.998214

Accuracy : 0.989490

Precision: 0.962175

Recall : 0.948718

F1-score : 0.955399

------------------------------------------------------------

Fold: 3 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 0.99 0.99 9560

TOR 0.96 0.96 0.96 1287

accuracy 0.99 10847

macro avg 0.98 0.98 0.98 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9512 48]

[ 55 1232]]

Log loss : 0.025460

AUC : 0.999137

Accuracy : 0.990504

Precision: 0.962500

Recall : 0.957265

F1-score : 0.959875

------------------------------------------------------------

Fold: 4 ((43389, 23)/(10847, 23))

precision recall f1-score support

nonTOR 0.99 1.00 1.00 9560

TOR 0.97 0.96 0.97 1287

accuracy 0.99 10847

macro avg 0.98 0.98 0.98 10847

weighted avg 0.99 0.99 0.99 10847

Confusion Matrix:

[[9522 38]

[ 48 1239]]

Log loss : 0.026125

AUC : 0.998922

Accuracy : 0.992072

Precision: 0.970243

Recall : 0.962704

F1-score : 0.966459
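To compare the tuned and untuned models across folds, the per-fold numbers printed in the two cross-validation runs can be averaged. The values below are copied from those outputs; only the summary code is new.

```python
from statistics import mean, stdev

# Per-fold scores copied from the cross-validation outputs (folds 0 to 4).
f1_untuned = [0.960280, 0.958366, 0.954315, 0.955814, 0.955538]
f1_tuned = [0.963135, 0.962643, 0.955399, 0.959875, 0.966459]
logloss_untuned = [0.026506, 0.027315, 0.032290, 0.027810, 0.027349]
logloss_tuned = [0.027303, 0.025370, 0.031088, 0.025460, 0.026125]

for name, untuned, tuned in [("F1-score", f1_untuned, f1_tuned),
                             ("Log loss", logloss_untuned, logloss_tuned)]:
    print("%s untuned: %.4f (+/- %.4f)  tuned: %.4f (+/- %.4f)"
          % (name, mean(untuned), stdev(untuned), mean(tuned), stdev(tuned)))
```

On average the tuned model has a slightly higher F1-score and a slightly lower log loss, although the gap is of the same order as the fold-to-fold variation.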

Holdout set evaluation¶

[9]:

model.fit(X_train, y_train)
probas = model.predict_proba(X_holdout)[:,1]
preds = (probas > 0.5).astype(int)

print(metrics.classification_report(y_holdout, preds, target_names=["nonTOR", "TOR"]))
print("Confusion Matrix: \n%s\n"%metrics.confusion_matrix(y_holdout, preds))
print("Log loss : %f" % (metrics.log_loss(y_holdout, probas)))
print("AUC : %f" % (metrics.roc_auc_score(y_holdout, probas)))
print("Accuracy : %f" % (metrics.accuracy_score(y_holdout, preds)))
print("Precision: %f" % (metrics.precision_score(y_holdout, preds)))
print("Recall : %f" % (metrics.recall_score(y_holdout, preds)))
print("F1-score : %f" % (metrics.f1_score(y_holdout, preds)))

precision recall f1-score support

nonTOR 1.00 1.00 1.00 11950

TOR 0.97 0.97 0.97 1609

accuracy 0.99 13559

macro avg 0.98 0.98 0.98 13559

weighted avg 0.99 0.99 0.99 13559

Confusion Matrix:

[[11909 41]

[ 55 1554]]

Log loss : 0.021121

AUC : 0.999294

Accuracy : 0.992920

Precision: 0.974295

Recall : 0.965817

F1-score : 0.970037
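The threshold-based metrics printed for the holdout set all follow from the confusion matrix, which scikit-learn lays out as `[[TN, FP], [FN, TP]]` for binary labels 0 and 1. A short worked example using the holdout matrix from the output; `binary_metrics` is a hypothetical helper name.

```python
def binary_metrics(confusion):
    """Derive precision, recall, F1 and accuracy from [[TN, FP], [FN, TP]]."""
    (tn, fp), (fn, tp) = confusion
    precision = tp / (tp + fp)   # share of predicted TOR that really is TOR
    recall = tp / (tp + fn)      # share of real TOR that was detected
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Holdout confusion matrix copied from the output.
holdout = [[11909, 41], [55, 1554]]
result = binary_metrics(holdout)
for name, value in result.items():
    print("%-9s: %f" % (name, value))
```

Reading the matrix this way also gives the operational picture: 41 nonTOR flows would raise a false alarm, and 55 TOR flows would pass undetected at the 0.5 threshold.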

Results¶

| Model | Precision | Recall | F1-Score |
|---|---|---|---|
| Logistic Regression (Singh et al., 2018) [34] | 0.87 | 0.87 | 0.87 |
| SVM (Singh et al., 2018) | 0.9 | 0.9 | 0.9 |
| Naïve Bayes (Singh et al., 2018) | 0.91 | 0.6 | 0.7 |
| C4.5 Decision Tree + Feature Selection (Lashkari et al., 2017) [29] | 0.948 | 0.934 | |
| Deep Learning (Singh et al., 2018) | 0.95 | 0.95 | 0.95 |
| Random Forest (Singh et al., 2018) | 0.96 | 0.96 | 0.96 |
| XGBoost + Tuning | 0.974 | 0.977 | 0.976 |

Holdout evaluation with all the available features¶

Using all the features results in near-perfect performance, suggesting “leaky” features. (These features are not to be used for predictive modeling, but are there for completeness.) Nevertheless, we show that using all features also yields a strong baseline relative to previous research.

[10]:

model.fit(X_train_all, y_train)
probas = model.predict_proba(X_holdout_all)[:,1]
preds = (probas > 0.5).astype(int)

print(metrics.classification_report(y_holdout, preds, target_names=["nonTOR", "TOR"]))
print("Confusion Matrix: \n%s\n"%metrics.confusion_matrix(y_holdout, preds))
print("Log loss : %f" % (metrics.log_loss(y_holdout, probas)))
print("AUC : %f" % (metrics.roc_auc_score(y_holdout, probas)))
print("Accuracy : %f" % (metrics.accuracy_score(y_holdout, preds)))
print("Precision: %f" % (metrics.precision_score(y_holdout, preds)))
print("Recall : %f" % (metrics.recall_score(y_holdout, preds)))

[12:02:43] WARNING: ../src/learner.cc:573:

Parameters: { "silent" } might not be used.

This may not be accurate due to some parameters are only used in language bindings but

passed down to XGBoost core. Or some parameters are not used but slip through this

verification. Please open an issue if you find above cases.

[12:02:43] WARNING: ../src/learner.cc:1095: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'binary:logistic' was changed from 'error' to 'logloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

/mnt/c/Users/deargle/projects/kepler-mapper/.venv/lib/python3.6/site-packages/xgboost/sklearn.py:1146: UserWarning: The use of label encoder in XGBClassifier is deprecated and will be removed in a future release. To remove this warning, do the following: 1) Pass option use_label_encoder=False when constructing XGBClassifier object; and 2) Encode your labels (y) as integers starting with 0, i.e. 0, 1, 2, ..., [num_class - 1].

warnings.warn(label_encoder_deprecation_msg, UserWarning)

              precision    recall  f1-score   support

      nonTOR       1.00      1.00      1.00     11950
         TOR       1.00      1.00      1.00      1609

    accuracy                           1.00     13559
   macro avg       1.00      1.00      1.00     13559
weighted avg       1.00      1.00      1.00     13559

Confusion Matrix:

[[11946     4]
 [    0  1609]]

Log loss : 0.000963

AUC : 0.999999

Accuracy : 0.999705

Precision: 0.997520

Recall : 1.000000

Results

| Model | Precision | Recall | Accuracy |
|---|---|---|---|
| ANN (Hodo et al., 2017) [35] | 0.983 | 0.937 | 0.991 |
| SVM (Hodo et al., 2017) | 0.79 | 0.67 | 0.94 |
| ANN + Feature Selection (Hodo et al., 2017) | 0.998 | 0.988 | 0.998 |
| SVM + Feature Selection (Hodo et al., 2017) | 0.8 | 0.984 | 0.881 |
| XGBoost + Tuning | 0.999 | 1.0 | 0.999 |

Topological Data Analysis

[11]:

import kmapper as km
import pandas as pd
import numpy as np
from sklearn import ensemble, cluster

df = load_and_prep_data()

# Drop the flow identifiers and the label; keep the remaining columns as features
features = [c for c in df.columns if c not in
            ['Source IP',
             ' Source Port',
             ' Destination IP',
             ' Destination Port',
             ' Protocol',
             'label']]

X = np.array(df[features])
y = np.array(df.label)

# Lens 1: Isolation Forest anomaly score (lower values are more anomalous)
projector = ensemble.IsolationForest(random_state=0, n_jobs=-1)
projector.fit(X)
lens1 = projector.decision_function(X)

# Lens 2: summed distance to the five nearest neighbors
mapper = km.KeplerMapper(verbose=3)
lens2 = mapper.fit_transform(X, projection="knn_distance_5")

# Combine both lenses into a single two-dimensional lens
lens = np.c_[lens1, lens2]

KeplerMapper(verbose=3)

..Composing projection pipeline of length 1:

Projections: knn_distance_5

Distance matrices: False

Scalers: MinMaxScaler()

..Projecting on data shaped (67795, 23)

..Projecting data using: knn_distance_5

..Scaling with: MinMaxScaler()
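To make the second lens more concrete, the following sketch shows what a summed nearest-neighbor distance computes. It is an illustration on synthetic points using scikit-learn's `NearestNeighbors`, not KeplerMapper's exact implementation; the helper name `knn_distance_lens` and the demo data are assumptions made for this example.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

def knn_distance_lens(X, n_neighbors=5):
    """Sum of distances from each sample to its n_neighbors nearest points.

    When the fitted data is passed again as the query, each point is
    returned as its own nearest neighbor at distance 0.
    """
    nn = NearestNeighbors(n_neighbors=n_neighbors).fit(X)
    distances, _ = nn.kneighbors(X)
    return distances.sum(axis=1)

# Synthetic demo: a dense cloud plus one far-away point (illustration only).
rng = np.random.default_rng(0)
X_demo = np.vstack([rng.normal(size=(200, 2)), [[10.0, 10.0]]])

lens_demo = knn_distance_lens(X_demo)
print("index of largest lens value:", lens_demo.argmax())
```

The isolated point at index 200 receives by far the largest value, which is the behavior the lens relies on: samples in sparse regions sit further from their neighbors than samples in dense regions.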

[12]:

G = mapper.map(
    lens,
    X,
    cover=km.Cover(n_cubes=20,
                   perc_overlap=.15),
    clusterer=cluster.AgglomerativeClustering(3))

print(f"num nodes: {len(G['nodes'])}")
print(f"num edges: {sum([len(values) for key, values in G['links'].items()])}")

Mapping on data shaped (67795, 23) using lens shaped (67795, 2)

Minimal points in hypercube before clustering: 3

Creating 400 hypercubes.

> Found 3 clusters in hypercube 0.

> Found 3 clusters in hypercube 1.

> Found 3 clusters in hypercube 2.

Cube_3 is empty.

Cube_4 is empty.

> Found 3 clusters in hypercube 5.

Cube_6 is empty.

> Found 3 clusters in hypercube 7.

> Found 3 clusters in hypercube 8.

> Found 3 clusters in hypercube 9.

> Found 3 clusters in hypercube 10.

> Found 3 clusters in hypercube 11.

> Found 3 clusters in hypercube 12.

Cube_13 is empty.

> Found 3 clusters in hypercube 14.

> Found 3 clusters in hypercube 15.

> Found 3 clusters in hypercube 16.

> Found 3 clusters in hypercube 17.

> Found 3 clusters in hypercube 18.

> Found 3 clusters in hypercube 19.

Cube_20 is empty.

Cube_21 is empty.

> Found 3 clusters in hypercube 22.

> Found 3 clusters in hypercube 23.

> Found 3 clusters in hypercube 24.

> Found 3 clusters in hypercube 25.

> Found 3 clusters in hypercube 26.

> Found 3 clusters in hypercube 27.

Cube_28 is empty.

Cube_29 is empty.

> Found 3 clusters in hypercube 30.

> Found 3 clusters in hypercube 31.

> Found 3 clusters in hypercube 32.

> Found 3 clusters in hypercube 33.

Cube_34 is empty.

Cube_35 is empty.

Cube_36 is empty.

Cube_37 is empty.

Cube_38 is empty.

> Found 3 clusters in hypercube 39.

> Found 3 clusters in hypercube 40.

> Found 3 clusters in hypercube 41.

> Found 3 clusters in hypercube 42.

> Found 3 clusters in hypercube 43.

Cube_44 is empty.

Cube_45 is empty.

Cube_46 is empty.

Cube_47 is empty.

> Found 3 clusters in hypercube 48.

> Found 3 clusters in hypercube 49.

> Found 3 clusters in hypercube 50.

> Found 3 clusters in hypercube 51.

Cube_52 is empty.

Cube_53 is empty.

Cube_54 is empty.

Cube_55 is empty.

> Found 3 clusters in hypercube 56.

> Found 3 clusters in hypercube 57.

> Found 3 clusters in hypercube 58.

> Found 3 clusters in hypercube 59.

Cube_60 is empty.

> Found 3 clusters in hypercube 61.

> Found 3 clusters in hypercube 62.

> Found 3 clusters in hypercube 63.

> Found 3 clusters in hypercube 64.

> Found 3 clusters in hypercube 65.

> Found 3 clusters in hypercube 66.

> Found 3 clusters in hypercube 67.

Cube_68 is empty.

Cube_69 is empty.

Cube_70 is empty.

Cube_71 is empty.

> Found 3 clusters in hypercube 72.

> Found 3 clusters in hypercube 73.

> Found 3 clusters in hypercube 74.

> Found 3 clusters in hypercube 75.

> Found 3 clusters in hypercube 76.

Cube_77 is empty.

> Found 3 clusters in hypercube 78.

> Found 3 clusters in hypercube 79.

> Found 3 clusters in hypercube 80.

Cube_81 is empty.

> Found 3 clusters in hypercube 82.

> Found 3 clusters in hypercube 83.

> Found 3 clusters in hypercube 84.

Cube_85 is empty.

> Found 3 clusters in hypercube 86.

> Found 3 clusters in hypercube 87.

Cube_88 is empty.

Cube_89 is empty.

> Found 3 clusters in hypercube 90.

> Found 3 clusters in hypercube 91.

> Found 3 clusters in hypercube 92.

> Found 3 clusters in hypercube 93.

Cube_94 is empty.

> Found 3 clusters in hypercube 95.

> Found 3 clusters in hypercube 96.

> Found 3 clusters in hypercube 97.

Cube_98 is empty.

> Found 3 clusters in hypercube 99.

> Found 3 clusters in hypercube 100.

Cube_101 is empty.

> Found 3 clusters in hypercube 102.

> Found 3 clusters in hypercube 103.

> Found 3 clusters in hypercube 104.

> Found 3 clusters in hypercube 105.

Created 352 edges and 216 nodes in 0:02:01.070359.

num nodes: 216

num edges: 352
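The log above reports 400 hypercubes because the lens has two dimensions and each is covered by 20 intervals (20 × 20 = 400). The sketch below illustrates the idea of an overlapping cover in a simplified form. The widening rule used here is an assumption chosen for clarity and is not claimed to match KeplerMapper's `Cover` exactly; `overlapping_intervals` is a hypothetical helper.

```python
import itertools
import numpy as np

def overlapping_intervals(lo, hi, n_cubes, perc_overlap):
    """Split [lo, hi] into n_cubes equal intervals, then widen each one
    so that neighboring intervals share part of their range."""
    width = (hi - lo) / n_cubes
    pad = perc_overlap * width / 2.0
    starts = lo + width * np.arange(n_cubes)
    return [(s - pad, s + width + pad) for s in starts]

# One set of intervals per lens dimension (lens values scaled to [0, 1]).
intervals = overlapping_intervals(0.0, 1.0, n_cubes=20, perc_overlap=0.15)

# A 2-D hypercube is a pair of intervals, one per lens dimension.
cubes = list(itertools.product(intervals, repeat=2))
print("hypercubes:", len(cubes))

def n_memberships(value):
    """Number of 1-D intervals that contain the given lens value."""
    return sum(a <= value <= b for a, b in intervals)

print("intervals containing 0.025:", n_memberships(0.025))
print("intervals containing 0.050:", n_memberships(0.050))
```

A sample that falls in the overlap between two intervals is clustered in both hypercubes. Clusters from different hypercubes that share samples are what become the edges of the graph.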

[13]:

_ = mapper.visualize(
    G,
    custom_tooltips=y,
    color_values=y,
    color_function_name="target",
    path_html="output/tor-tda.html",
    X=X,
    X_names=list(df[features].columns),
    lens=lens,
    lens_names=["IsolationForest", "KNN-distance 5"],
    title="Detecting encrypted Tor Traffic with Isolation Forest and Nearest Neighbor Distance"
)

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std
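NumPy raises "invalid value encountered in true_divide" when a division evaluates 0/0. In the expression shown in the warning, that would happen for any feature whose standard deviation is zero, for example a constant column. Whether that is the cause in this dataset is an assumption, not something the output confirms. The sketch below reproduces the situation on a toy array and shows one way to guard the division; the variable names mirror the warning, everything else is illustrative.

```python
import numpy as np

# Toy data: the second column is constant, so its standard deviation is 0.
X_toy = np.array([[1.0, 5.0],
                  [2.0, 5.0],
                  [3.0, 5.0],
                  [4.0, 5.0]])
cluster_members = [0, 1]

X_mean = X_toy.mean(axis=0)
X_std = X_toy.std(axis=0)
cluster_X_mean = X_toy[cluster_members].mean(axis=0)

# np.sqrt(d ** 2) is the absolute deviation |d|.
deviation = np.abs(cluster_X_mean - X_mean)

# Guarded division: only divide where the standard deviation is positive,
# and report 0 for constant features instead of NaN.
std_m = np.divide(deviation, X_std,
                  out=np.zeros_like(deviation),
                  where=X_std > 0)
print(std_m)
```

The warning does not stop the visualization from being written; it only means that some per-feature statistics may be NaN.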

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide

std_m = np.sqrt((cluster_X_mean - X_mean) ** 2) / X_std

/mnt/c/Users/deargle/projects/kepler-mapper/kmapper/visuals.py:413: RuntimeWarning: invalid value encountered in true_divide